Article Summary:

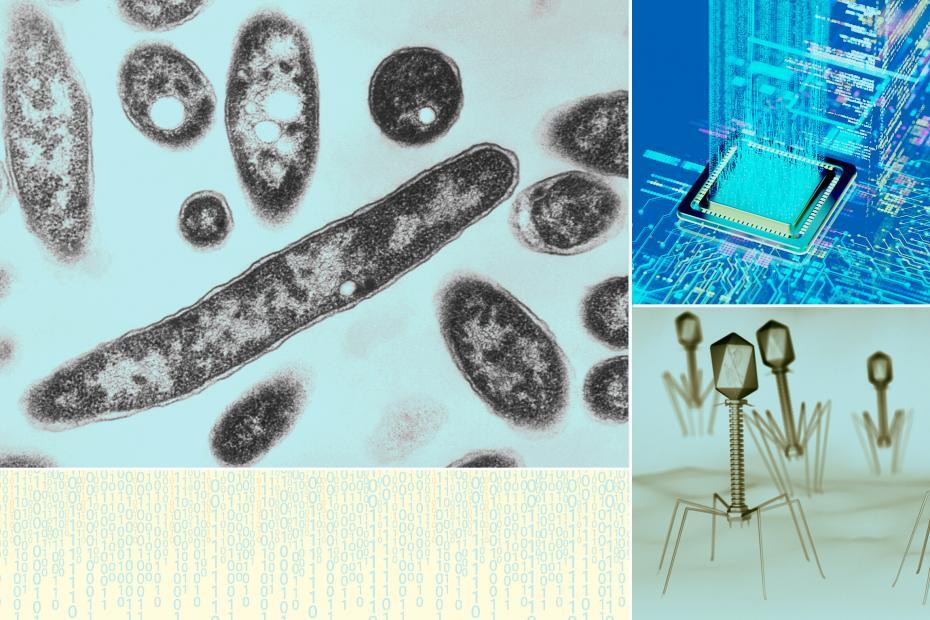

Scientists at Stanford University have demonstrated that artificial intelligence can now design functional viruses, specifically bacteriophages, using genomic language models trained on existing viral DNA. In their experiments, the AI-generated viral genomes were synthesized in a laboratory and tested on E. coli, and some variants proved even more potent than natural viruses.

The potential benefits are significant, including treatments for antibiotic-resistant bacteria and new targeted therapies, but the risks are equally profound. The same technology that can design helpful viruses could be used to create dangerous pathogens that target humans.

Traditional biosecurity strategies focused on prevention are now inadequate. Existing screening systems cannot detect novel, AI-generated viruses, and algorithmic information can spread rapidly online.

The article recommends a three-pronged approach to address these emerging challenges.

The core message is that resilience, not prevention, is now the key strategy. As AI’s capabilities in biological design accelerate, the United States must develop systems that can rapidly respond to emerging biological threats, recognizing that the timeline between design and potential disaster has dramatically compressed.

Article Excerpt:

“For years, experts have warned that generative biology could collapse the timeline between design and disaster. That moment has arrived. The viruses created in the Stanford experiment were harmless to humans. The next ones might not be.”